Bug #37893

Duplicate hosts (open)

Description

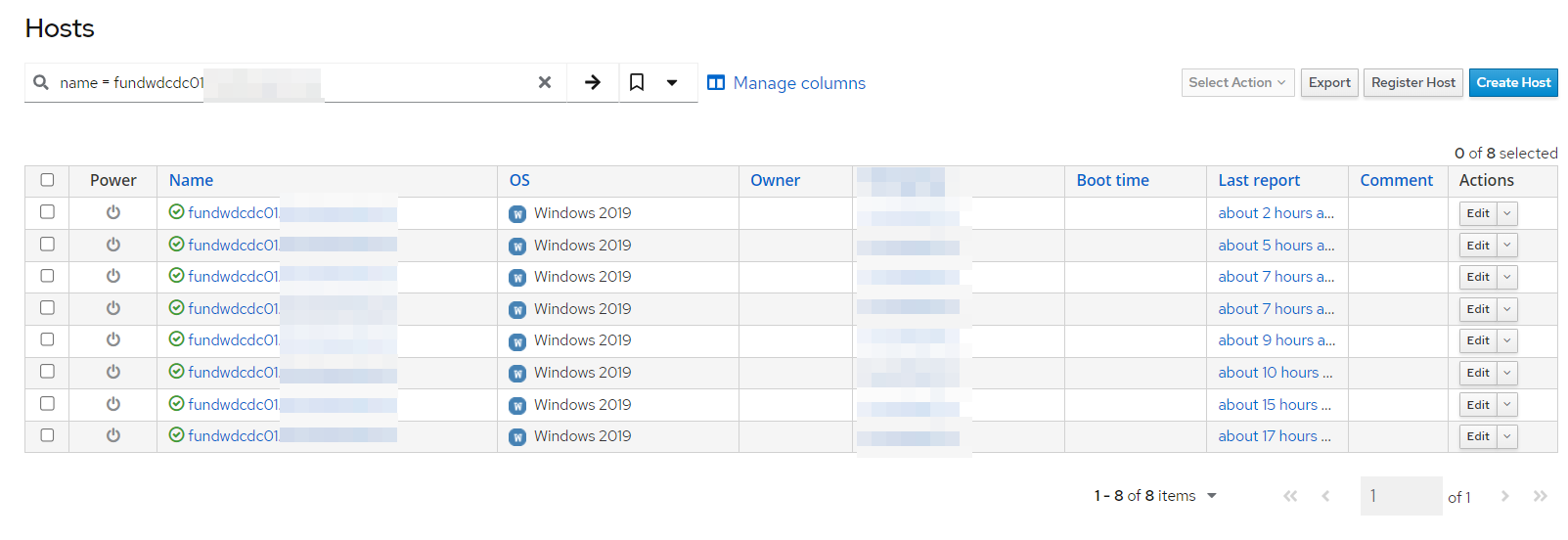

We're having an issue where we get duplicated hosts in Foreman. We don't use Foreman for provisioning, and hosts are only created via the salt_proxy/plugin API. I cannot, for the life of me, find why they are duplicated: same hostname, IP, and MAC address. I had one host duplicated 752 times. The only difference is a couple of facts (pid, system time, etc.). Below is an example of a single host that has created itself 8 times in a period of just 10 hours. When looking at the host reports, they all appear to point back to the same report, regardless of which host you click on.

How can I resolve this?

Foreman version: 3.10.0

Plugins:

- foreman-tasks 9.1.1

- foreman_default_hostgroup 7.0.0

- foreman_kubevirt 0.1.9

- foreman_puppet 6.2.0

- foreman_remote_execution 13.0.0

- foreman_salt 16.0.2

- foreman_statistics 2.1.0

- foreman_templates 9.4.0

- foreman_vault 2.0.0


Updated by Jeff S 9 months ago


Loading production environment (Rails 6.1.7.7)

irb(main):001:0> hosts = Host::Managed.where(name: 'fundwdcdc01.fundrunner.local')

=>

[#<Host::Managed:0x0000785f55867770

...

irb(main):002:0> puts "Total count: #{hosts.count}"

Total count: 8

=> nil

irb(main):003:0> puts "IDs of hosts:"

irb(main):004:0> hosts.each { |host| puts host.id }

IDs of hosts:

10973

11949

12026

11858

11652

11186

11469

11699

=>

[#<Host::Managed:0x0000785f55867770

id: 10973,

name: "fundwdcdc01.fundrunner.local",

last_compile: Mon, 07 Oct 2024 20:53:06.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Mon, 07 Oct 2024 20:53:07.152096000 UTC +00:00,

created_at: Mon, 07 Oct 2024 20:28:22.784914000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>,

#<Host::Managed:0x0000785f556e8818

id: 11949,

name: "fundwdcdc01.fundrunner.local",

last_compile: Tue, 08 Oct 2024 08:23:07.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Tue, 08 Oct 2024 08:23:07.711870000 UTC +00:00,

created_at: Tue, 08 Oct 2024 08:13:02.744268000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>,

#<Host::Managed:0x0000785f556e8728

id: 12026,

name: "fundwdcdc01.fundrunner.local",

last_compile: Tue, 08 Oct 2024 11:53:07.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Tue, 08 Oct 2024 11:53:08.330681000 UTC +00:00,

created_at: Tue, 08 Oct 2024 11:25:50.719164000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>,

#<Host::Managed:0x0000785f556e8638

id: 11858,

name: "fundwdcdc01.fundrunner.local",

last_compile: Tue, 08 Oct 2024 06:53:07.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Tue, 08 Oct 2024 06:53:07.809075000 UTC +00:00,

created_at: Tue, 08 Oct 2024 06:27:59.031400000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>,

#<Host::Managed:0x0000785f556e8548

id: 11652,

name: "fundwdcdc01.fundrunner.local",

last_compile: Tue, 08 Oct 2024 04:53:06.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Tue, 08 Oct 2024 04:53:07.608600000 UTC +00:00,

created_at: Tue, 08 Oct 2024 04:43:56.081640000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>,

#<Host::Managed:0x0000785f556e8458

id: 11186,

name: "fundwdcdc01.fundrunner.local",

last_compile: Mon, 07 Oct 2024 22:23:06.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Mon, 07 Oct 2024 22:23:07.251764000 UTC +00:00,

created_at: Mon, 07 Oct 2024 22:14:09.136669000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>,

#<Host::Managed:0x0000785f556e8368

id: 11469,

name: "fundwdcdc01.fundrunner.local",

last_compile: Tue, 08 Oct 2024 03:23:06.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Tue, 08 Oct 2024 03:23:07.495806000 UTC +00:00,

created_at: Tue, 08 Oct 2024 03:02:42.632271000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>,

#<Host::Managed:0x0000785f556e8278

id: 11699,

name: "fundwdcdc01.fundrunner.local",

last_compile: Tue, 08 Oct 2024 06:23:07.000000000 UTC +00:00,

last_report: "[FILTERED]",

updated_at: Tue, 08 Oct 2024 06:23:07.627763000 UTC +00:00,

created_at: Tue, 08 Oct 2024 06:22:17.834070000 UTC +00:00,

root_pass: nil,

architecture_id: nil,

operatingsystem_id: 4,

ptable_id: nil,

medium_id: nil,

build: false,

comment: nil,

disk: nil,

installed_at: nil,

model_id: 3,

hostgroup_id: 5,

owner_id: nil,

owner_type: nil,

enabled: true,

puppet_ca_proxy_id: nil,

managed: false,

use_image: nil,

image_file: nil,

uuid: nil,

compute_resource_id: nil,

puppet_proxy_id: nil,

certname: nil,

image_id: nil,

organization_id: 1,

location_id: 10,

type: "Host::Managed",

otp: nil,

realm_id: nil,

compute_profile_id: nil,

provision_method: nil,

salt_proxy_id: nil,

grub_pass: nil,

salt_environment_id: nil,

global_status: 0,

lookup_value_matcher: "[FILTERED]",

pxe_loader: nil,

initiated_at: nil,

build_errors: nil,

salt_autosign_key: nil,

salt_status: nil,

creator_id: 1>]
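One pattern in the output above may be worth checking: every record's updated_at falls at :23:07 or :53:07 past the hour, and each record is last updated less than half an hour after it was created, after which a new record appears. The following is a minimal sketch in plain Ruby (no Foreman code involved) that computes those lifetimes from the timestamps pasted above. The constant and method names are made up for illustration, and any link between this cadence and the report upload schedule is only an assumption.

```ruby
require "time"

# [created_at, updated_at] per host ID, copied from the console output above
# (fractional seconds dropped).
HOST_TIMES = {
  10973 => ["2024-10-07 20:28:22 UTC", "2024-10-07 20:53:07 UTC"],
  11186 => ["2024-10-07 22:14:09 UTC", "2024-10-07 22:23:07 UTC"],
  11469 => ["2024-10-08 03:02:42 UTC", "2024-10-08 03:23:07 UTC"],
  11652 => ["2024-10-08 04:43:56 UTC", "2024-10-08 04:53:07 UTC"],
  11699 => ["2024-10-08 06:22:17 UTC", "2024-10-08 06:23:07 UTC"],
  11858 => ["2024-10-08 06:27:59 UTC", "2024-10-08 06:53:07 UTC"],
  11949 => ["2024-10-08 08:13:02 UTC", "2024-10-08 08:23:07 UTC"],
  12026 => ["2024-10-08 11:25:50 UTC", "2024-10-08 11:53:08 UTC"],
}.freeze

# Returns [id, created, updated, lifetime_seconds] rows, oldest record first.
def record_lifetimes(times)
  rows = times.map do |id, (created, updated)|
    c = Time.parse(created).utc
    u = Time.parse(updated).utc
    [id, c, u, (u - c).round]
  end
  rows.sort_by { |row| row[1] }
end

LIFETIMES = record_lifetimes(HOST_TIMES)

LIFETIMES.each do |id, created, updated, seconds|
  puts format("%-6d created %s, last updated %s (%d s later)",
              id,
              created.strftime("%d %b %H:%M:%S"),
              updated.strftime("%d %b %H:%M:%S"),
              seconds)
end
```

If the same pattern holds for other duplicated hosts, it might help narrow down which periodic job or upload creates the new record instead of updating the existing one.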

Updated by Jeff S 9 months ago


This is absolutely killing our ability to use Foreman. I have to manually delete thousands of duplicated hosts every morning.

I'm not sure if this is a Foreman issue or an issue within the Salt proxy/plugin. I did find that this is where Salt creates new hosts:

https://github.com/theforeman/foreman_salt/blob/master/app/services/foreman_salt/report_importer.rb

I have tried every combination of "create host when reports upload" and "create host when facts upload". No combination of on and off fixes the issue.

Updated by Jeff S 8 months ago


Per the forum post https://community.theforeman.org/t/unique-hosts-in-foreman-salt/39731/28 - we have given up on trying to figure this out. The report importer is still duplicating hosts, to the tune of thousands per week.

Updated by Jeff S 8 months ago


This is becoming a major issue for us, as we are approaching 50,000 hosts. Would someone be so kind as to help me out? I now believe this to be a database issue.

Get the duplicates

irb(main):001:0> duplicates = Host::Managed

irb(main):002:0> .group(:name)

irb(main):003:0> .having('COUNT(*) > 1')

irb(main):004:0> .order(Arel.sql('COUNT(*) DESC'))

irb(main):005:0> .count

=>

{"10-222-52-104.redacted1.redacted2.global"=>2,

...

irb(main):006:0>

irb(main):007:1* duplicates.each do |name, count|

irb(main):008:1* puts "#{name} appears #{count} times"

irb(main):009:0> end

10-222-52-104.redacted1.redacted2.global appears 2 times

There are hundreds more, but let's take this first one.

Inspect it in the Foreman Rake console

host2 = Host::Managed.find_by('LOWER(name) = ?', "10-222-52-104.redacted1.redacted2.global")

=>

#<Host::Managed:0x000079d8761c3860

### No other existing record:

irb(main):002:0> host = Host::Managed.find_by('LOWER(name) = ?', "10-222-52-104")

=> nil

OK, so we have what Foreman thinks is one instance of this host.

However, SQL sees:

foreman=# SELECT id, name, last_compile, updated_at, created_at FROM hosts WHERE name = '10-222-52-104.redacted1.redacted2.global';
  id   |                   name                   |        last_compile        |         updated_at         |         created_at
-------+------------------------------------------+----------------------------+----------------------------+----------------------------
 65492 | 10-222-52-104.redacted1.redacted2.global | 2024-10-29 18:00:11.174354 | 2024-10-29 18:00:11.925573 | 2024-10-29 00:36:05.240964
 73105 | 10-222-52-104.redacted1.redacted2.global | 2024-11-07 19:00:11.566837 | 2024-11-07 19:00:12.353696 | 2024-11-07 00:17:45.039598
(2 rows)

2 records? For the same host…

And thus, we see duplicate hosts in the UI.
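A caveat on the comparison above: ActiveRecord's `find_by` returns at most one record (the first match), so a single result from it does not by itself mean Foreman sees only one host. A `where` with the same condition, like the one used in the first console session, returns every match. The sketch below shows the same distinction in plain Ruby, with `Enumerable#find` and `Enumerable#select` standing in as an analogy for `find_by` and `where`. The `Row` struct and constants are hypothetical; only the two IDs and the host name come from the SQL output.

```ruby
# Plain-Ruby stand-in for the two rows returned by the SQL query above.
Row = Struct.new(:id, :name)

HOST_NAME = "10-222-52-104.redacted1.redacted2.global"
TABLE = [Row.new(65492, HOST_NAME), Row.new(73105, HOST_NAME)].freeze

# Analogous to find_by: stops at the first matching row.
first_match = TABLE.find { |row| row.name.downcase == HOST_NAME }

# Analogous to where: collects every matching row.
all_matches = TABLE.select { |row| row.name.downcase == HOST_NAME }

puts "find returned 1 row:      #{first_match.id}"
puts "select returned #{all_matches.size} rows:   #{all_matches.map(&:id).join(', ')}"
```

So the console and the database probably agree here: both hold two rows for the name, and the single object from `find_by` is just the first of them.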

This issue is exacerbated by the fact we are approaching 50,000 hosts, and on our way to 100k. Little issues like this will compound.
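Until the root cause is found, cleanup at this scale could at least be planned programmatically rather than by hand. The following is a minimal sketch, assuming the intent is to keep the most recently updated record for each name and remove the rest. It works on plain Ruby data and does not touch Foreman or the database. `HostRow`, `cleanup_plan`, and the third (non-duplicated) row are hypothetical and only there for illustration; the first two rows come from the SQL output above.

```ruby
# Minimal stand-in for a row of the hosts table.
HostRow = Struct.new(:id, :name, :updated_at)

ROWS = [
  HostRow.new(65492, "10-222-52-104.redacted1.redacted2.global", "2024-10-29 18:00:11"),
  HostRow.new(73105, "10-222-52-104.redacted1.redacted2.global", "2024-11-07 19:00:12"),
  # Hypothetical non-duplicated host, to show that it is left alone.
  HostRow.new(1, "unique-host.example", "2024-11-07 19:00:12"),
].freeze

# For every name that occurs more than once, keep the most recently updated
# record and list the remaining IDs as deletion candidates.
def cleanup_plan(rows)
  duplicated = rows.group_by(&:name).select { |_name, group| group.size > 1 }
  duplicated.transform_values do |group|
    # Timestamps in this format sort correctly as plain strings.
    newest = group.max_by(&:updated_at)
    { keep: newest.id, delete: (group - [newest]).map(&:id) }
  end
end

PLAN = cleanup_plan(ROWS)

PLAN.each do |name, plan|
  puts "#{name}: keep #{plan[:keep]}, delete #{plan[:delete].join(', ')}"
end
```

Whether the newest or the oldest record should survive depends on which one carries the reports and facts you care about, so that choice is worth verifying on a few hosts before deleting anything.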

Can anyone possibly shed some light on what is happening here? Thank you for your consideration.